Root Causes of Data Quality Issues: Understand Them to Act Better

Understanding the real causes of data quality problems - and finding lasting solutions

Why data remains flawed despite tools, projects and intentions

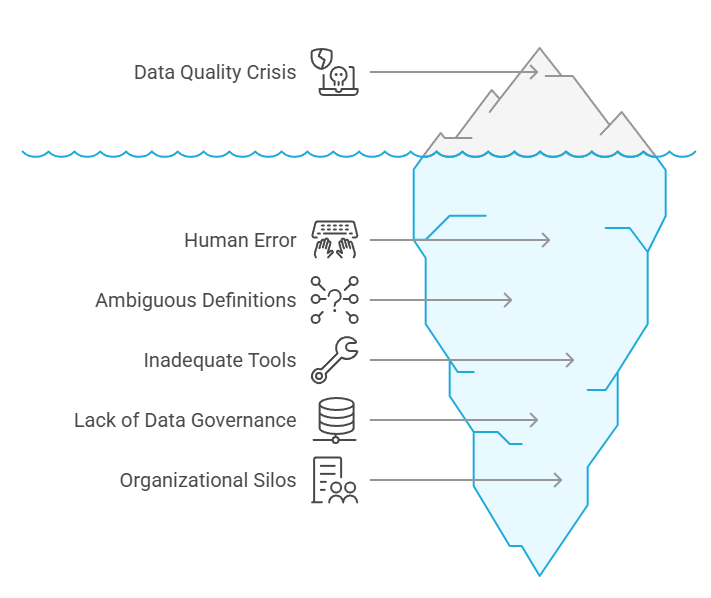

In many organizations, data errors seem inevitable. We clean up, we correct, we audit... but they keep coming back. Dashboards lose reliability. Teams doubt their numbers. AI projects fail at scale. The cause? A persistent confusion between the symptom and the root of the problem.

We attack the visible effects (duplicates, missing values, inconsistencies) without ever solving what causes them.

A problem at the crossroads of people, tools and organization

Human error is the most frequent cause. Manual data entry, done in a hurry, produces anomalies that no one detects. Teams enter incomplete names, use inconsistent formats or fill in critical fields incorrectly. And very often, a lack of data culture prevents anyone from measuring the consequences. Add to this the ambiguity of definitions: the same indicator (e.g. "active customer") varies from one department to another.
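To make the ambiguity of definitions concrete, here is a minimal Python sketch. The records, the reference date and both definitions of "active customer" (a 90-day order window for sales, a 30-day login window for marketing) are hypothetical, invented purely for illustration.

```python
from datetime import date

# Hypothetical customer records, invented for illustration.
customers = [
    {"id": 1, "last_order": date(2024, 5, 20), "last_login": date(2024, 6, 1)},
    {"id": 2, "last_order": date(2023, 1, 10), "last_login": date(2024, 5, 28)},
    {"id": 3, "last_order": date(2024, 4, 2), "last_login": date(2023, 12, 15)},
    {"id": 4, "last_order": None, "last_login": date(2024, 6, 10)},
]

TODAY = date(2024, 6, 15)  # fixed reference date so the example is reproducible


def active_for_sales(customer, today=TODAY):
    """Assumed sales definition: at least one order in the last 90 days."""
    last_order = customer["last_order"]
    return last_order is not None and (today - last_order).days <= 90


def active_for_marketing(customer, today=TODAY):
    """Assumed marketing definition: a login in the last 30 days."""
    last_login = customer["last_login"]
    return last_login is not None and (today - last_login).days <= 30


sales_active = {c["id"] for c in customers if active_for_sales(c)}
marketing_active = {c["id"] for c in customers if active_for_marketing(c)}

# Customers on which the two departments disagree.
disputed = sales_active ^ marketing_active

print("Sales counts:", len(sales_active), "active customers")
print("Marketing counts:", len(marketing_active), "active customers")
print("Disputed customer ids:", sorted(disputed))
```

Both functions are correct with respect to their own definition. The discrepancy comes from the missing shared definition, not from a bug in either report.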

The tools don't help either. Some systems, still indispensable, have become veritable "black boxes". Difficult to integrate and impossible to audit, they generate discrepancies with every export. Automated controls are either too few in number or poorly placed. And in many cases, data flows are neither monitored nor traced. A piece of data can go through five stages of transformation without anyone being able to explain its origin.
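As an illustration of what tracing a data flow can look like, the following Python sketch keeps a record of every transformation applied to a value. The `Traced` class, the step names and the `source:crm_export` label are hypothetical and do not describe any particular product; real lineage tooling works at the level of datasets and pipelines rather than single values.

```python
from dataclasses import dataclass, field


@dataclass
class Traced:
    """A value bundled with the list of steps that produced it."""
    value: object
    lineage: list = field(default_factory=list)

    def apply(self, step_name, func):
        """Run `func` on the value and record before/after under `step_name`."""
        new_value = func(self.value)
        entry = {"step": step_name, "before": self.value, "after": new_value}
        return Traced(new_value, self.lineage + [entry])


# Hypothetical raw amount as it might come out of a system export.
raw_text = "  1 234,50 EUR "
raw = Traced(
    raw_text,
    [{"step": "source:crm_export", "before": None, "after": raw_text}],
)

amount = (
    raw.apply("strip_whitespace", str.strip)
    .apply("drop_currency", lambda s: s.replace("EUR", "").strip())
    .apply("normalize_separators", lambda s: s.replace(" ", "").replace(",", "."))
    .apply("to_float", float)
)

# Every stage can now be explained after the fact.
for entry in amount.lineage:
    print(f'{entry["step"]}: {entry["before"]!r} -> {entry["after"]!r}')
```

With the lineage attached, a surprising figure in a report can be walked back step by step to the export it came from.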

The lack of concrete governance makes the situation even worse.

Without defined rules, shared standards or supervision over time, quality deteriorates quietly. We discover it when it's already too late: false reporting, biased models, failed audits.

And above all, there is the organization itself. Silos slow down exchanges, departments pass the buck, projects move forward without coordination. Without a strong sponsor or a dedicated budget, data quality remains the concern of a few isolated individuals.

Even mature companies come up against causes beyond their control. Third-party databases, customer data entered via forms and partner files all contain their own flaws: typos, obsolete codes, missing values. And regulatory developments (GDPR, CSRD, e-invoicing...) impose new requirements that make previously tolerated problems visible.

👉 Reliability cannot be decreed: it must be built and maintained.

What needs to change: less correction, better governance

What many organizations lack is not just another tool. It's a coherent approach, combining automation, shared responsibility, documented rules and steering over time.

It's not about aiming for abstract perfection, but about making data manageable. This requires:

- visible governance, with roles and rules known to all,

- integrated validations as soon as data is entered into systems,

- traceability of each transformation,

- the ability to detect degradations over time and correct them without depending on obscure scripts.
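Two of the requirements above, validation at entry and detection of degradation over time, can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the rule names, the simplified email pattern, the two-letter country convention, the sample batches and the 5-point alert threshold.

```python
import re
from datetime import date

# Deliberately simple email pattern, for illustration only.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

# Hypothetical documented rules: each one has a name, so a rejection
# can be explained instead of being buried in an obscure script.
RULES = {
    "name_present": lambda r: bool((r.get("name") or "").strip()),
    "email_format": lambda r: bool(EMAIL_RE.match(r.get("email") or "")),
    "country_is_iso2": lambda r: bool(
        re.fullmatch(r"[A-Z]{2}", r.get("country") or "")
    ),
}


def validate(record):
    """Return the names of the rules the record violates (empty list = valid)."""
    return [name for name, check in RULES.items() if not check(record)]


def pass_rate(records):
    """Share of records that satisfy every rule, between 0.0 and 1.0."""
    if not records:
        return 1.0
    return sum(1 for r in records if not validate(r)) / len(records)


def degraded(history, threshold=0.05):
    """Flag a drop larger than `threshold` between the last two measurements."""
    if len(history) < 2:
        return False
    return history[-2][1] - history[-1][1] > threshold


# Hypothetical daily batches.
batch_monday = [
    {"name": "Ada Lovelace", "email": "ada@example.com", "country": "GB"},
    {"name": "Alan Turing", "email": "alan@example.com", "country": "GB"},
]
batch_tuesday = [
    {"name": "", "email": "grace@example.com", "country": "US"},
    {"name": "Linus", "email": "not-an-email", "country": "Finland"},
]

history = [
    (date(2024, 6, 10), pass_rate(batch_monday)),
    (date(2024, 6, 11), pass_rate(batch_tuesday)),
]

for record in batch_tuesday:
    print(record["email"], "violates:", validate(record))
print("Quality degraded:", degraded(history))
```

Keeping the rules in one named, readable place is the design choice that matters here: the same definitions serve to reject bad records at entry and to measure quality over time.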

Reliable data is an asset that circulates, informs decisions and can be trusted.

🎯 What your company gains

When root causes are addressed at source, everything improves:

- Decisions are no longer based on assumptions.

- AI and BI projects don't collapse at scale.

- Compliance becomes a reflex, not a stress.

- And above all, business teams regain confidence in the data they use.

Data quality is no longer just a line item in a project plan. It becomes a performance driver.

Want to see things more clearly?

Do you notice errors but don't really know where they're coming from?

Do you suspect systemic causes but don't yet have the means to document them?

📅 Book an appointment with a Tale of Data expert to establish a structured, clear and actionable diagnosis.

👉 Request a demo
